🎶 Project ImproVision

Overview

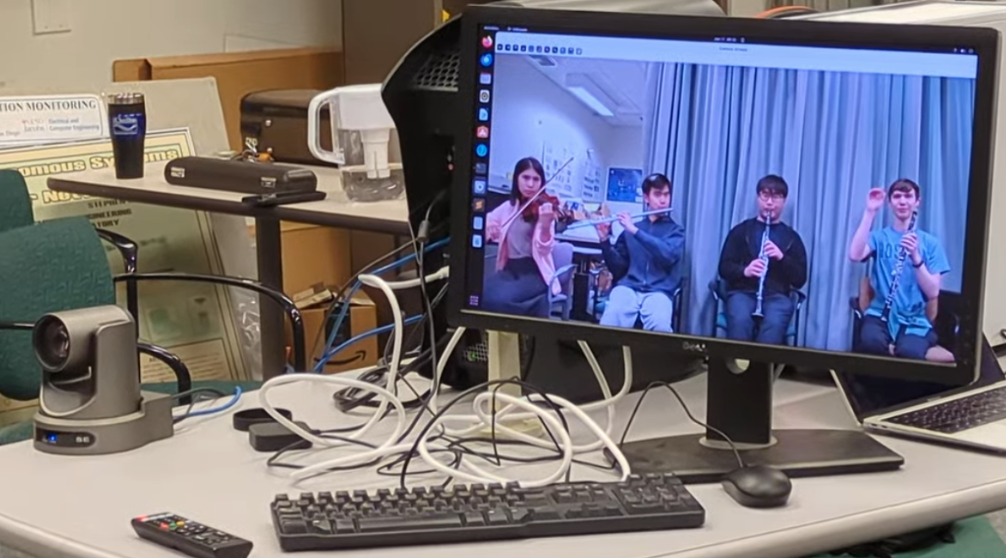

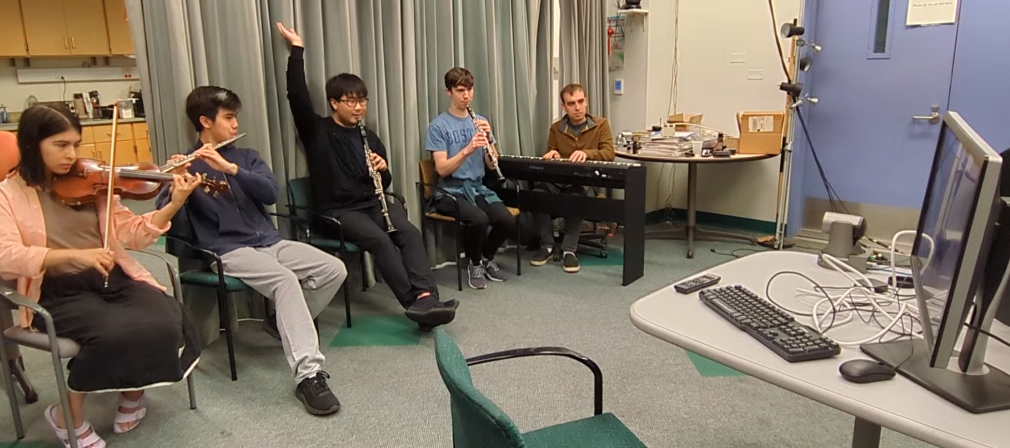

Project ImproVision investigates the future of human-robot interaction in musical performance settings. We’re developing a robotic conducting system that can interpret visual cues from musicians and respond with appropriate conducting gestures in real time.

Technical Implementation

- Developed a camera-based robotic interface in Python for gesture detection

- Implemented real-time pitch analysis systems using C++

- Created an integrated system enabling dynamic real-time interaction between musicians and the robotic conductor

Key Achievements

- Led the development of the gesture recognition system

- Contributed to two research papers

- Presented our work at the ArtsIT Conference

Publications

- “Creativity and Visual Communication from Machine to Musician: Sharing a Score through a Robotic Camera” - EAI ArtsIT Conference 2024

- “ImproVision Equilibrium: Towards Multimodal Musical Human-Machine Interaction” (Under review)

Future Directions

We currently have several “interactive musical games,” which I’m combining into a full system demo over the coming months!